|

|

FWIW, this change might have been made in conjunction with nVidia too. I say this because, for the past few nVidia (Studio) driver updates (starting about four months ago or so), whenever I update the nVidia driver, Windows temporarily (until I reboot) shows 3 display monitors, even though I only have two. And my secondary monitor (Intel) temporarily becomes my Primary.

(I'm guessing I get a count of three because the GTX-1650 Super will support two monitors. The next time I update the video driver, I'll try to remember to use the Identify tool in Windows to see if one of my two monitors reports as #3.)

As I reported elsewhere in this forum, ever since Windows' Hardware-accelerated GPU Scheduling and Intel's DCH video driver, I've been able to force PD 18 to use my nVidia for hardware encoding via the Windows Graphics settings. Since PD 18 hasn't changed (via a patch), this shows that something else has changed in the Windows ecosystem.

|

|

|

Quote

Probably irrational euphoria. SVRT should never use 100% of CPU resources; if it does, SVRT basically isn't being used.

Well, to be more accurate on my part, it's the part of SVRT where PD has to re-encode parts of the video that don't fit the majority profile I've chosen. (As when I merge an old Intro/Outro recorded at 720p with a new recording of a movie taken at 1080p.)
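A rough way to picture this: SVRT can pass through segments whose format already matches the output profile and has to re-encode the rest. The sketch below is a simplified, hypothetical model (the `Clip` type, its fields, the matching rule, and the 30-second intro/outro lengths are all assumptions for illustration; PowerDirector's real SVRT decisions also depend on effects, edits and cut points). It flags which clips would be re-encoded and what share of the runtime that is.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    # Hypothetical description of a timeline clip (not a PowerDirector API)
    name: str
    width: int
    height: int
    fps: float
    codec: str
    seconds: float


def plan_render(clips, width, height, fps, codec):
    """Mark each clip 'copy' if it matches the output profile, else 're-encode'."""
    plan = []
    for clip in clips:
        matches = (clip.width, clip.height, clip.fps, clip.codec) == (width, height, fps, codec)
        plan.append((clip.name, "copy" if matches else "re-encode"))
    return plan


def reencoded_fraction(clips, plan):
    """Share of the total runtime that has to be re-encoded."""
    total = sum(c.seconds for c in clips)
    redo = sum(c.seconds for c, (_, action) in zip(clips, plan) if action == "re-encode")
    return redo / total


timeline = [
    Clip("intro", 1280, 720, 30.0, "H.264", 30),
    Clip("movie", 1920, 1080, 30.0, "H.264", 5400),
    Clip("outro", 1280, 720, 30.0, "H.264", 30),
]
plan = plan_render(timeline, 1920, 1080, 30.0, "H.264")
print(plan)
print(f"{reencoded_fraction(timeline, plan):.1%} of the runtime is re-encoded")
```

In this model only the 720p intro and outro are re-encoded, so the CPU-heavy part of the render is short relative to the whole movie.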

|

|

|

Quote

No matter how fast the CPU or the GPU is, at some point other latencies will become the bottleneck.

True, and I've posted about bottlenecks before in other Forum Indexes here.

Just popping in to say that I recently upgraded from an i3-8400 to an i5-9400, and since then, SVRT (for the videos that I edit) uses nearly 100% of all six cores. (This was in PD 18, at 1080p.) So I'm a happier camper.

(As for latencies, my memory went from DDR4-2400 to DDR4-2666 with the CPU change, although the CAS latency (CL) rose from 15 to 16. And the i5-9400 has more cache, and something called "unified" cache, which is supposed to be better than what I had before. Hard to believe that these small changes eliminated memory bottlenecks. But the data are what they are.)
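Converting CAS latency from clock cycles to nanoseconds suggests the CL bump was not actually a latency penalty. A minimal sketch, assuming the usual first-word approximation (CL x cycle time, where DDR's clock runs at half the data rate) and a single 64-bit channel for bandwidth; other timings and the memory controller are ignored.

```python
def true_latency_ns(cas_latency, data_rate_mts):
    """First-word CAS latency in nanoseconds.

    DDR transfers twice per clock, so the clock runs at data_rate/2 MHz
    and one cycle lasts 2000 / data_rate nanoseconds.
    """
    return cas_latency * 2000 / data_rate_mts


def peak_bandwidth_gbs(data_rate_mts, channels=1):
    """Theoretical peak bandwidth in GB/s for 64-bit (8-byte) channels."""
    return data_rate_mts * 8 * channels / 1000


for label, cl, rate in [("DDR4-2400 CL15", 15, 2400), ("DDR4-2666 CL16", 16, 2666)]:
    print(f"{label}: {true_latency_ns(cl, rate):.2f} ns, "
          f"{peak_bandwidth_gbs(rate):.1f} GB/s per channel")
```

With these inputs the higher CL still works out to slightly lower latency (about 12.5 ns vs. 12.0 ns), along with roughly 11% more peak bandwidth per channel.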

|

|

|

A contributor here suggested to me in a PM that my question is better asked over at DigitalFAQ.com or Videohelp.com.

Which I will do.

But just for completeness, after sleeping on my proposed solution of 48 fps, it occurred to me that a frame rate of (Update) 24 fps should have captured all the frames in a 24 fps movie.

So that got me thinking about B-frames (bidirectionally predicted reference frames). In my screen recorder utility, I had had "Look-ahead" enabled. Apparently Look-ahead doesn't look closely enough, and did not provide enough reference frames for my short segment of quick motion.

So I went back to 24 fps for my screen capture and turned off "Look-ahead." I also increased Max B-frames to (Update) 4. And that catches the fast motion in my little test.
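For readers unfamiliar with the setting: Max B-frames caps how many consecutive B-frames the encoder may place between its anchor frames (I or P), and B-frames are decoded after the later anchor they refer to. The sketch below uses a fixed, simplified pattern; real encoders (especially with look-ahead enabled) choose frame types adaptively, so `gop_pattern` and `decode_order` are illustrative helpers, not any encoder's API.

```python
def gop_pattern(gop_length, max_b_frames):
    """Frame types in display order for a fixed I/P/B pattern (no adaptive decisions)."""
    types = []
    for i in range(gop_length):
        if i == 0:
            types.append("I")          # GOP starts with a keyframe
        elif i % (max_b_frames + 1) == 0:
            types.append("P")          # anchor after each run of B-frames
        else:
            types.append("B")          # predicted from the anchors on both sides
    return "".join(types)


def decode_order(types):
    """Indices in decode order: each anchor (I/P) is sent before the B-frames that precede it."""
    order, pending_b = [], []
    for idx, frame_type in enumerate(types):
        if frame_type == "B":
            pending_b.append(idx)
        else:
            order.append(idx)
            order.extend(pending_b)
            pending_b = []
    # Trailing B-frames would really wait for the next GOP's I-frame; appended here for simplicity
    order.extend(pending_b)
    return order


pattern = gop_pattern(10, 4)
print(pattern)
print(decode_order(pattern))
```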

Update: Nevertheless, for really fast motion, like a waterfall scene, I find that I have to record at 30 fps to avoid stuttering.

I don't understand why that is.
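One possible explanation, offered as a hypothesis rather than a diagnosis: the player can only change the picture on a monitor refresh, so each 24 fps film frame stays on screen for a whole number of refresh periods, and some frames are shown for less than 1/24 s. A recorder sampling evenly every 1/24 s can then skip a briefly shown frame. The sketch assumes each film frame appears on the first refresh at or after its timestamp and that the recorder samples at perfectly even intervals; it computes how long frames stay on screen at 60 Hz and 75 Hz.

```python
import math
from fractions import Fraction


def on_screen_ticks(src_fps, refresh_hz, frames):
    """Refresh periods each source frame stays visible (frame k appears at the first refresh >= k/src_fps)."""
    starts = [math.ceil(Fraction(k * refresh_hz, src_fps)) for k in range(frames + 1)]
    return [b - a for a, b in zip(starts, starts[1:])]


def shortest_on_screen(src_fps, refresh_hz):
    """Shortest time (in seconds) any frame is visible over one second of video."""
    return Fraction(min(on_screen_ticks(src_fps, refresh_hz, src_fps)), refresh_hz)


for hz in (60, 75):
    shortest = shortest_on_screen(24, hz)
    print(f"{hz} Hz cadence: {on_screen_ticks(24, hz, 8)}, shortest frame {float(shortest) * 1000:.1f} ms")
    for capture_fps in (24, 30):
        # Evenly spaced captures can only skip a frame if their interval exceeds the shortest display time
        safe = Fraction(1, capture_fps) <= shortest
        print(f"  capture at {capture_fps} fps: {'no skipped frames' if safe else 'can skip frames'}")
```

Under these assumptions, 24 fps capture can skip frames on both a 60 Hz monitor (shortest frame about 33 ms) and a 75 Hz monitor (shortest frame 40 ms), while 30 fps does not, which is consistent with the waterfall observation. At 60 Hz, 30 fps sits exactly on the edge, so real-world timing jitter could still cause an occasional miss.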

|

|

|

I think that the answer is: 48 fps for a film movie.

I did a bunch of testing on a short clip of an Alfred Hitchcock movie, where there was some fast movement that caught my eye when it jumped on the big screen TV.

I tried screen capture frame rates from 24 fps (per my post above, to "match" the frame rate of the movie) to 60 fps, the highest that my screen capture utility allows.

I also lowered my monitor's refresh rate from 75 Hz to 60 Hz. (No difference that I noticed.)

It turns out that 24 fps is too slow, in that it sometimes misses some frames of a movie.

I made a short video demonstrating this. If the attachment won't open here, then this is a link to stream the video.

60 fps works, but is too fast in that there are a lot of duplicate frames. And, of course, 60 fps creates a larger file than 48 fps.

I am hypothesizing that the frames of an Internet-streamed movie are not synced to anything at the receiving end. And so I've settled on 48 fps, which is 2 x 24 fps, to oversample the film in order to catch all the frames.

There are still some duplicates this way, but not as many as with 60 fps capture. And there are no lost frames, unlike at 24 fps, per my short video. (I suppose it's a toss-up whether, perception-wise, an occasional duplicate frame causing stutter is worse than a missing frame causing a jerk.)
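The oversampling argument can be checked with a small simulation. It assumes (hypothetically) a 24 fps film shown on a 60 Hz monitor, where each frame appears at the first refresh at or after its timestamp, and a recorder that grabs whatever is on screen at perfectly even intervals with an arbitrary, unsynchronized starting offset (`phase`). It counts skipped film frames and duplicate captures over one second.

```python
import math
from fractions import Fraction


def shown_frame(t, src_fps=24, refresh_hz=60):
    """Index of the film frame on screen at time t (seconds)."""
    tick = math.floor(t * refresh_hz)                        # last refresh at or before t
    return math.floor(Fraction(tick * src_fps, refresh_hz))  # newest frame that has appeared by then


def capture_stats(capture_fps, phase=Fraction(0), seconds=1):
    """Return (missed, duplicates) for one evenly spaced capture run."""
    grabbed = [shown_frame(phase + Fraction(i, capture_fps)) for i in range(capture_fps * seconds)]
    duplicates = sum(1 for a, b in zip(grabbed, grabbed[1:]) if a == b)
    missed = len(set(range(grabbed[0], grabbed[-1] + 1)) - set(grabbed))
    return missed, duplicates


def worst_case_missed(capture_fps, steps=100):
    """Largest number of missed frames over evenly spread starting offsets."""
    phases = [Fraction(k, steps * capture_fps) for k in range(steps)]
    return max(capture_stats(capture_fps, p)[0] for p in phases)


for fps in (24, 30, 48, 60):
    print(fps, "fps: worst-case missed =", worst_case_missed(fps),
          "| duplicates at phase 0 =", capture_stats(fps)[1])
```

In this idealized model, 24 fps capture skips frames at some starting offsets but not others (which fits "sometimes misses"), 30, 48 and 60 fps never skip, and 60 fps produces the most duplicates. Real recorders have timing jitter, which is one argument for leaving margin above the minimum.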

As a side note, I tried using PD 18 to do my frame by frame testing. I remember someone here saying that PD allows "frame by frame" editing.

If it does, I could not get that to work. What I found was that PD 18 gave me second by second editing. So I had to use another Editor that allows shuttling between frames.

|

|

|

This question is primarily for JL_JL, since he seems to understand this stuff. And I was going to PM him this question. But I thought perhaps others might be interested in his answer. (I vaguely remember asking this before, but if I did, can't find it here. And I keep vacillating back and forth as to what is the right answer.)

I record old Noir movies that are either played on TV or, more recently, streamed over the web.

For the ones played on TV, I use whatever frame rate Windows Media Center gives me in its recorded file. I have no control over that.

For those streamed over the web, I use a screen capture program to record the movie playing full screen from my monitor.

I have my screen recorder set to 30 fps. (Partly because of my monitor's 60 Hz refresh rate, although my new monitor refreshes at 75 Hz.)

Yesterday I was checking to see how much compression I could get away with in HEVC. (I am at a ridiculous 1000 kb/s lately, although the image quality seems okay to me, viewing later on a 52" TV screen.)

I was stepping through a high motion segment of a (film) movie and I noticed a bunch of "smearing" of small, but detailed, areas, like eyes, mouths.

(I can post a screen shot if anyone wants to see.)

I initially thought that I had too much compression. But changing the compression to ridiculously high Quality settings (that is, not much compression) didn't change the smearing.

That got me thinking that perhaps the smearing was caused by me not matching the original source material (film) in my screen capture.

Now, here's where I get confused. Many of the later movies that are streamed are streamed from a Blu-Ray disc. (In fact, they must all be digitized because it's not like a Projectionist has to mount a film when you call it up on the web to watch it.)

I read that Blu-Ray has a frame rate of 24 fps to match (film) movies.

I presume that the way that Blu-Rays are made is that a special machine steps through a (film) movie a frame at a time, scans a frame, and moves on to the next. (As opposed to playing the movie on a screen and recording it from the screen, as is done on TV with old movies. (Which I presume needs telecine and produces extra (duplicate) frames.))

If so, then by recording a 24 fps movie at 30 fps, wouldn't I be getting a telecine-type effect, where an extra (duplicate) frame is inserted every so often?
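As a rough illustration, assume the recorder grabs a frame every 1/30 s starting exactly in step with the film, with no jitter and ignoring the monitor's refresh (idealized assumptions). Capture `i` then shows film frame floor(i x 24 / 30), and the repeats fall into a regular cadence, similar in spirit to pulldown.

```python
def source_frame_for_capture(i, src_fps=24, capture_fps=30):
    """Film frame visible at capture i, assuming both clocks start together with no jitter."""
    return (i * src_fps) // capture_fps


captured = [source_frame_for_capture(i) for i in range(30)]  # one second of capture
repeats = [i for i in range(1, 30) if captured[i] == captured[i - 1]]
print(captured[:10])
print(f"{len(set(captured))} distinct film frames, {len(repeats)} repeated captures")
```

Under these assumptions every fifth capture repeats the previous film frame, so each second of 30 fps capture holds the 24 film frames plus 6 duplicates.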

I have compared two screen recordings taken at 24 and 30 fps and it seems to me that the 24 fps is slightly better. But then it's not a double blind test and I could just be fooling myself.

Is there a definitive answer as to what frame rate I should be using for screen capture of film movies streamed over the Net?

|

|

|

Since I like solving a good puzzle, is there a way that you can file-share a small project that is repeatably causing your machines to crash so that we can try it out for ourselves? I know there's not much solace in telling you that PD 18 rarely causes BSODs for others. (I've never had Win10 BSOD on me, but then, I don't work with high-res, big files or complicated editing.)

As you acknowledged, a BSOD usually means a hardware problem - especially in this day and age, where Windows 10 is fairly good about protecting itself in software. But I wouldn't rule out a "software" issue, as below.

The fact that two machines, independent of each other, are crashing is a puzzler.

But what is not independent is that you own both of them. Are they on the same network/Internet connection at your house? (Same modem? Same router? Same IoT appliances?)

If yes, then my hunch is that you've picked up malware. Especially since, on your second, new machine, you said that everything was working okay for a few days. And then it BSOD'd.

So something changed in those few days. Presumably it wasn't hardware. (And I am assuming, since you worked in the repair industry, that you don't have both your boxes tightly caged up where they can't breathe. Overheating would cause a BSOD for hardware reasons.)

To test my hypothesis, I suggest doing a complete bare metal reinstall of Win 10 on either machine. With your ethernet cable unplugged. (Which means that you won't be asked to create an MS account. You'll be air-gapped.)

To do it perfectly clean, I suggest installing Win10 to a leftover hard drive that you have lying around, that has been Boot & Nuke'd.

Only install Windows and PD 18. Nothing else. (Well, except Comodo's free firewall, if needed below. Set it for "Custom" on the Firewall rules. It is very good at stopping threats. Even the US spook agencies complained about how good it is.)

(If you don't have Win10 install media, you can download a Win10 ISO for free. If you need certain drivers to get Win10 to work on your box, copy only those over by USB.)

I can't remember if PD needs online activation to work the first time. If it does, then plug your Ethernet cable in just for that. (Try the trial version. Or, I don't know if CL still offers it, but at one time they were offering a free, slimmed-down version. I suggest trying those so that you don't use up activations on your purchased copies.)

Let's see what happens.

(If it still happens after a clean install, then I wonder how the line voltage at your house is? There has to be something shared by both computers that is causing this problem.)

|

|

|

I add this comment for completeness:

Last week I did a Host Refresh of Win10 (that is, an in-place update, keeping my files and apps) from build 19042.572 to 19042.685.

After I did this, my trick of getting HWA to work on PD 18 stopped working.

Then I noticed that during the Refresh, Windows had dropped back to an older (standard) driver for my UHD 630, instead of the October 2020 (DCH) release that I had installed when my trick worked. (The old driver was from 2019.)

I updated the UHD driver to 27.20.100.8935 and now I can get nVidia HWA to work in PD 18 again.

So, I had initially said that it was a later version of Win10 (20H2) AND Hardware-accelerated GPU Scheduling that allowed PD 18 to see the nVidia. But it might be that the only thing that one needs is a later UHD driver.

Or perhaps one needs all three (latest Windows, later UHD driver, and Hardware-accelerated GPU Scheduling). Or some combination of the three.

As they say in textbooks, I leave this exercise to the reader.

|

|

|

|

It sounds like PD is switching into Non-Realtime Preview, since he can see the sound bars move but doesn't hear anything.

|

|

|

Thanks for your research and good to see the correlation.

Yeah, I figured a simple sine wave was the easiest test to do.

I will resist the temptation to raise the audio level in my TV movie recordings, then.

|

|

|

So I went to YouTube and found a few "Test your Hearing" videos. I assume that they were recorded professionally (although I have no basis for that assumption). In the first video, the dBFS meter in OBS showed a steady -12 dB below the red 0 dB, with the VU mark about 3 dB lower, at -15.

Since people on the web say to set your VU to -18 dB, this particular video seems like a reasonable benchmark.

There was another similar video, which said it was recorded at "-6 dB," and the indicators in OBS seem to correlate well with that one too, being about 6 dB lower than the first video.

So I imported the first video into PD. The audio waveform showed about 50% of Full Scale in PD.

It seems to me that the horizontal slider in PD's audio settings, labeled Audio Gain, is simply a quick-and-dirty way to adjust the audio level of a track. It might be that 100 (50 above the normal setting of 50, i.e., 2x) correlates to +6 dB.

When I used PD's vertical VU slider instead, adding +11 dB brought me just below clipping on the waveform.

Considering that +11 dB is close to the 12 dB of headroom that OBS's dBFS meter showed (-12 dBFS), this behavior in PD seems believable.
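The conversions behind these numbers: an amplitude ratio converts to decibels as 20 x log10(ratio), so doubling the amplitude is about +6.02 dB, a waveform peaking at 50% of full scale is about -6 dBFS, and a -12 dBFS peak sits at about 25% of full scale. Whether PD's Audio Gain slider and waveform display are linear in amplitude is an assumption here, not something documented.

```python
import math


def ratio_to_db(ratio):
    """Amplitude ratio -> decibels."""
    return 20 * math.log10(ratio)


def db_to_ratio(db):
    """Decibels -> amplitude ratio."""
    return 10 ** (db / 20)


print(f"2x amplitude          = {ratio_to_db(2):+.2f} dB")
print(f"50% of full scale     = {ratio_to_db(0.5):+.2f} dBFS")
print(f"-12 dBFS peak         = {db_to_ratio(-12):.1%} of full scale")
print(f"-12 dBFS + 11 dB gain = {-12 + 11} dBFS")
```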

And so I am going to conclude that the TV movies that I record were recorded with their levels at -18 dB on a VU scale. (Remember that these are old movies from the 40's and 50's, when all they had were real rms VU meters. (As opposed to peak meters of today.))

And then I am adding about 6 dB to them when I SVRT them.

Which I probably don't need to do, since the movies sound fine when I play them directly from the TV signal, without the boost I add when I bring my recordings into PD for playback.

|

|

|

You know how there are Color Bars for calibrating displays?

Is there a video that has a "standard" volume level (like, max allowed by spec?) in a single tone for calibrating purposes?
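If no ready-made file turns up, a calibration tone is easy to generate. The sketch below (Python standard library only) writes a sine wave at a chosen peak level in dBFS to a 16-bit mono WAV file. The 1 kHz frequency, -18 dBFS level, 48 kHz sample rate and the file name `tone_1k_-18dBFS.wav` are illustrative choices, not values quoted from any standard.

```python
import math
import struct
import wave


def tone_samples(freq_hz=1000.0, dbfs=-18.0, seconds=1.0, rate=48000):
    """16-bit integer samples of a sine wave whose peak is `dbfs` relative to full scale."""
    peak = 32767 * 10 ** (dbfs / 20)
    n = int(seconds * rate)
    return [round(peak * math.sin(2 * math.pi * freq_hz * i / rate)) for i in range(n)]


def write_wav(path, samples, rate=48000):
    """Write mono 16-bit PCM samples to a WAV file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes = 16-bit
        wav.setframerate(rate)
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))


if __name__ == "__main__":
    write_wav("tone_1k_-18dBFS.wav", tone_samples(seconds=10))
```

Importing the resulting file into PD or playing it through OBS gives a known reference level to compare the meters against.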

|

|

|

I record TV Movies using OBS Studio. During recording, the Volume Meter in OBS shows levels just touching the red.

But when I import videos into PD, their audio isn't close to topping out on the waveform display. I always have to slide the sideways mixer control to the max for that channel.

I don't hear any clipping when I play the videos on the TV. So apparently I am not overdoing the levels.

But I don't understand the discrepancy between the level indications.

|

|

|

I've been away from the forum for a while, so perhaps someone has reported this already. (Since Hardware-accelerated Scheduling has been available for six months now.)

I just upgraded to Win10 2004. I also enabled Hardware-accelerated GPU Scheduling, a new feature in 2004. And I have the latest Studio driver 457.30 from nVidia. (This driver supports Hardware-accelerated GPU Scheduling. I don't know if it's the first to do so or not.)

I changed the Graphics settings for PD 18 to High Performance and, to my great surprise, I can now encode using the nVidia! That's with my 2nd monitor still hooked up to my Intel UHD 630, same as it's always been.

(These aren't the only changes I've made to my system recently. I also updated the Intel UHD driver.)

This is with 18.0.2725.0.

|

|

|

|

Tnx. Please don't bother. Someone else will be able to inform us if it's been fixed.

|

|

|

Hmm... there was a patch (fix) to PD 18 365 on June 29. I expected a similar patch for the perpetual version. But I don't get a notice when I run the program. Does this mean that PD 18 isn't going to get it?

Update: Apparently not. I just saw the "What's New" in PD 19. There it says:

Also new to users updating from PowerDirector 18 to 19 (already available in PowerDirector 365).

- Utilize the Title Reveal Mask feature for more creative title text animation.

- Import 8K Videos with the support of an AMD VCN2.0 GPU.

- Encode AAC 5.1ch audio with MKV and MP4 files.

- Modify the graphics colors of motion graphics titles.

- Attach shapes created in the Shape Designer in Motion Tracker.

- Export SRT subtitle files with or without SubRip style formatting.

- Adds priority customer support feature within program

And @optodata, did they fix the monitor enumeration problem, so that users with Intel iGPUs can now use their nVidia GPUs for HA?

|

|

|

Thanks. Now that you explained it from a math viewpoint, it does sound like a "Duh" moment on my part.

I suppose I'll have to get a feel for what a good BW is for HEVC encodes.
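The math in question: at a given average bit rate, file size is simply bit rate x duration, whatever the codec. HEVC's advantage is reaching a given quality at a lower bit rate, so the savings only show up when the bit rate is lowered. A sketch covering the video stream only (audio and container overhead ignored; the one-hour duration is an illustrative value):

```python
def stream_size_gb(bitrate_kbps, seconds):
    """Approximate stream size in gigabytes (10^9 bytes) for a given average bit rate."""
    bits = bitrate_kbps * 1000 * seconds
    return bits / 8 / 1e9


one_hour = 3600
for label, kbps in [("H.264 at 30,000 kb/s", 30_000),
                    ("HEVC at 30,000 kb/s", 30_000),
                    ("HEVC at 11,000 kb/s", 11_000)]:
    print(f"{label}: {stream_size_gb(kbps, one_hour):.2f} GB")
```

Both 30,000 kb/s encodes come out at 13.5 GB per hour; only the lower HEVC bit rate (4.95 GB per hour) shrinks the file.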

I'll have to do some more comparisons, but it seems that the HEVC done by the Intel GPU is fuzzier than the HEVC done by nVidia.

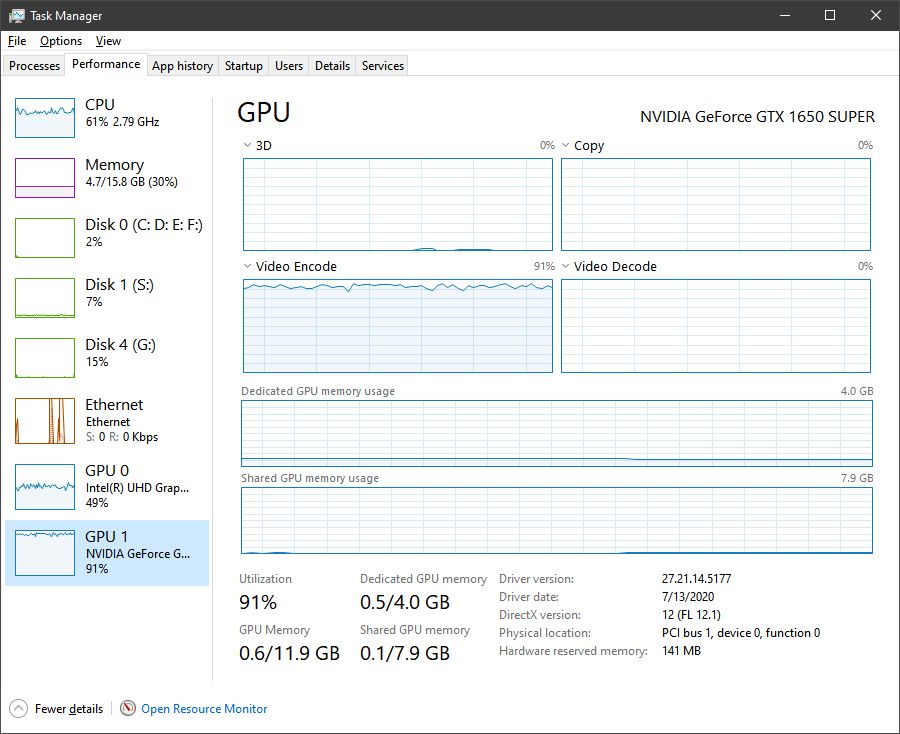

Oh, BTW, I did the HEVC conversion test with the GTX-1650 Super. Like you, I got a 5.6:1 ratio of video length to encoding time.

Here's a screenshot from Task Manager.

|

|

|

So, Jeff, a dumb question:

I converted a 1080p H.264 to HEVC using PD. I accepted the default profile for 1080p HEVC. (Since, when I use Profile Analyzer, it always wants to "convert" to H.264, and doesn't give me any advice on HEVC settings.)

The original video has a BW of 30,000 kb/s. The PD default profile for HEVC is 11,000 kb/s.

I did the conversion and the new video is noticeably blockier than the original.

So I thought, "Okay, I'll set the BW for the HEVC to 30,000."

I did the conversion again, and, to my surprise, the file size of the H.265 is exactly the same as the H.264!

I thought that HEVC was supposed to deliver approx half the file size for the same quality/BW video?

Am I doing something wrong? Or not understanding?

When I did the same conversion using HandBrake, it too lowered the BW of the converted video, even further, to 9,000 kb/s. The file size was about a third of the original, and the video looks good to me. I notice that HandBrake presets a "comb" filter for the conversion, whereas PD doesn't. Could that be the difference?

(Can discuss PM if you want.)

|

|

|

Good to know.

I would run your test, but am still waiting for CL to patch the problem where my iGPU is blocking me from using my nVidia. (What appears to be a Display Enumeration problem, as Optodata discovered.)

But if sufficiently motivated, I can disable the iGPU in my BIOS and run your test.

|

|

|

I put this here as example of what can be done with hardware.

The screenshot below was taken during a conversion from H.264 to H.265 (NVENC) using HandBrake.

It is not editing, so not apples to apples when comparing to PD.

Still, it's interesting to see how all my hardware is being used to almost its maximum potential.

For example, my CPU is running at about two-thirds capacity. I presume it is doing demux/mux. My Intel iGPU (UHD 630) is decoding the video. My nVidia GTX-1650 Super is maxed out doing the encoding.

It took 20 minutes to convert a 1.5-hour 1080p video, a ratio of 4.5 to 1.

|

|

|