If you have the same problem, please add your reports and comments. Here comes the description, and then my tears...

[Prelude]

Since the introduction of the newer generations of Intel processors, it is common for laptops to contain two graphics solutions: the internal Intel HD Graphics and a separate high-performance NVidia GPU. These are installed independently, normally with Intel as the primary option, but the NVidia driver allows the NVidia card to be set as the default one.

This switch may be set to "Auto" or to a "Preferred" adapter. Also, one can launch a given app with a graphics adapter selected in the right-click context menu on the desktop. The NVidia control panel also allows setting the preferred choice on a per-application basis or system-wide.

With this said, I want to underline that such configurations are quite common and are becoming progressively more common today. So it is only natural for us consumers to expect that developers (i.e. Cyberlink) account for this fact and do NOT find themselves surprised when a user reports a "strange configuration". It is not uncommon anymore!

[Where problem appears..]

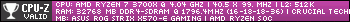

What I have now is a new laptop with this kind of configuration, running a brand new Power Director 16 on Windows 10. All the patches and updates are correctly installed and configured, with all the side-by-side codecs, Intel/NVidia drivers etc., including all the runtimes like .NET, VB, Microsoft C++, Java, Flash as well as DirectX, Vulkan, OpenGL and who knows what else, all running smoothly and bug free.

Lots of RAM, a fast SSD, a fast processor, and a powerful NVidia card.

And, what is important, CUDA-enabled apps from other manufacturers, like Adobe, MediaCoder, WinX etc., all run fine, and all of them can be configured to start with either the Intel or the NVidia adapter. And they really do - I use monitoring to check whether my CUDA app really loads my GPU and how. So I don't just assume - I ran many tests before I wrote this.

[NOW. THE PROBLEM]

Power Director does not work with my NVidia card. Only once did it launch with the "non OpenCL" option, and then the hardware-accelerated effects, render and previews option was greyed out, i.e. it could not be switched on anyway. After that, no matter what I tried, it only offers the "Enable OpenCL" option. Enabling it applies Intel optimizations, which may speed up the supported effects by some 10-15%, and employs Intel hardware H.264/H.265 encoding, but that is all!

I was never able to detect any NVidia GPU activity, even when rendering, even when I launch Power Director 16 from the right-click context menu and explicitly select "Launch with High-performance NVidia adapter".

[What I tried..]

Power Director also ignores the system-wide setting that gives priority to the NVidia graphics card. It always launches with Intel HD graphics.

I also cannot set the NVidia solution as the default on a per-application basis in the NVidia control panel, because guess what - while all the other apps listed there have the choice between Intel HD and the NVidia High-Performance graphics cards - ...

[The outcome..]

Power Director is defaulted to the "Integrated Graphics Intel solution", and this is not even clickable: it is the only option!

[Why is that?]

My guess is that Power Director 16 reports to the switching driver that it is not compatible with the NVidia card driver, and hence no choice is offered. Always Intel HD Graphics.

[Tears and Pain..]

Now, you can imagine my total disappointment after all these investments... My pain is almost cosmic!

Why, why, why did you do that to me, Cyberlink? Why not include a list of supported cards, just like Adobe did, so one could at least manually add a missing card? The CUDA interface is pretty standard and all the other apps are working just fine, so why does Power Director 16 fail? It even prevents me from trying, because the selection is disabled!

[Tech details]

My GPU is based on the GK107 core. This should be supported.

[What may calm me down..]

I am asking Cyberlink to fix this, because I cannot change the card!

I would also like an official note on this issue here. Cmon guys from Cyberlink, tell me, why this failure? Why does it happen only with Power Director? What is the use of this TrueVelocity if everything is so slowwwww...

[How you community may help?]

Share your thoughts and experience! If you have the same configuration and the same type of problem, please submit your pain too.

Maybe then Cyberlink will discover that not everything is okay.

[My thanks..]

To all readers and participants!

Alya (me)

[Update 1]

Just installed the 2127 beta patch.. nothing changed. Again, on the first launch the first option changed from "OpenCL" to "Hardware supported effects, preview and rendering.." but again it was greyed out. On the following launches it changed back to "OpenCL".. nothing changed in the NVidia Control panel - Intel HD graphics defaulted, no choice possible.

[Update 2]

Browsed the system registry for NVidia interface keys.. found that there actually is OpenCL support for my NVidia card - OpenCL64.dll is registered. Thus, hypothetically, Power Director could use OpenCL for hardware acceleration through the NVidia solution. Indeed, why not?

But OpenCL interfaces with the Intel HD graphics only. There are only "Intel Optimized" labels on the effects and, what is more important, there is no NVidia GPU activity (lowest clocks, zero load) while rendering these effects or encoding. Sorry guys..
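For anyone who wants to repeat the "lowest clocks, zero load" check, here is a minimal Python sketch of one way to do it. It is not necessarily the monitoring tool I used above. It assumes the `nvidia-smi` utility that ships with the NVidia driver is on the PATH. The function names and the 5% idle threshold are my own illustration, and some laptop GeForce cards report utilization as "[Not Supported]", which the sketch treats as unknown rather than idle.

```python
import csv
import io
import subprocess

# Fields passed to `nvidia-smi --query-gpu`; this selection is an assumption.
QUERY_FIELDS = ["name", "utilization.gpu", "clocks.sm"]


def _to_int(value):
    """Return an int, or None when the driver prints e.g. "[Not Supported]"."""
    try:
        return int(value)
    except ValueError:
        return None


def parse_gpu_rows(csv_text):
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    rows = []
    for record in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if len(record) != len(QUERY_FIELDS):
            continue  # skip blank or malformed lines
        name, util, clock = (field.strip() for field in record)
        rows.append({
            "name": name,
            "utilization_pct": _to_int(util),
            "sm_clock_mhz": _to_int(clock),
        })
    return rows


def looks_idle(row, max_utilization_pct=5):
    """True only when utilization is known and at or below the threshold.

    The 5% default is an arbitrary illustrative value, not a vendor figure.
    """
    util = row["utilization_pct"]
    return util is not None and util <= max_utilization_pct


def query_gpus():
    """Run nvidia-smi once and return the parsed rows."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_gpu_rows(result.stdout)


if __name__ == "__main__":
    try:
        for gpu in query_gpus():
            state = "idle" if looks_idle(gpu) else "busy or unknown"
            print(gpu["name"], gpu["utilization_pct"], gpu["sm_clock_mhz"], state)
    except (OSError, subprocess.CalledProcessError) as error:
        print("Could not query nvidia-smi:", error)
```

Run it a few times while a render is in progress. If the NVidia card still shows up as idle every time, the application is most likely not using it.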

[Update 3]

Found that the hybrid video solution (2 switched graphics adapters) is governed by NVidia Optimus technology. This one is known to have problems with applications properly detecting video modes. The technology is supported on Windows 7 and up, so my Windows 10 should support it. But here might be a hint for developers on where to look. After this technology was introduced, some games are known to have stopped being able to use the NVidia solution and defaulted to the internal Intel HD graphics..

[Update 3.1]

Regarding the Optimus technology. I discovered that, at least officially, my laptop manufacturer does not support it. Therefore, I cannot choose in my BIOS which card I am using. It is always the integrated solution by default there. Also, I only have 3 display ports (in total, including the eDP laptop panel, HD and old style VGA), not the 6 I would expect from Optimus.

I checked the Registry and found that NVidia Optimus is installed, but is it used (?).

So maybe the problem really is related to the Optimus technology - more specifically, Power Director 16 might expect it to be there, and the NVidia driver says it might be there, but it might not be there! Hence the mess. If anybody could give me a more detailed method to detect this Optimus beast for sure, I would test it more specifically.

After all, other developers have somehow resolved this situation, if it exists at all - their software works with both graphics adapters, and Power Director 16 does not.

This message was edited 6 times. Last update was at Oct 19. 2017 02:38
